Using Docker to host multiple website containers on one server

I am relatively new to Docker, and I wanted to share, and also note for myself, some useful information on how to set up a few containers so that my server can host multiple website containers. I know there may be better and more efficient ways to get the same result, one of which is simply using one container or server to host multiple virtual hosts (domains). The idea behind this project is to use Docker on a large server to host various kinds of containers for Java development, and at the same time host a few low-traffic websites on that same server without paying for a hosting service like BlueHost.

Overview of test environment

To test the proof of concept, I am using a MacBook Pro (2014) with macOS High Sierra (10.13.5), a 2.6 GHz Intel i5, and 8GB of memory. I have Docker for Mac Community Edition version 18.06.0 installed. I will edit my /etc/hosts file to point a fake domain name to my local box via a local IP address, and then also test through my router’s IP address with port forwarding enabled. I have two services: one for the proxy server and one to host the WordPress website. The WordPress site is really two containers, one for the MySQL database and one for the Apache web services, so there are three containers in total.

Setup DNS or Hosts file

After this proof of concept works, the end goal is to set up one of my domains to point to my home router using a dynamic DNS service. This service watches for when my ISP changes my router’s IP address and updates the domain’s DNS record to match. I could do this manually by editing my domain registration, but what fun would that be? (Truthfully, it is very rare that my router’s IP address changes, but this covers the case where my IP address lease expires in the middle of the night and my ISP decides to change my IP.) Another way around this would be to pay for a static IP address from your ISP, but that can be cost prohibitive.

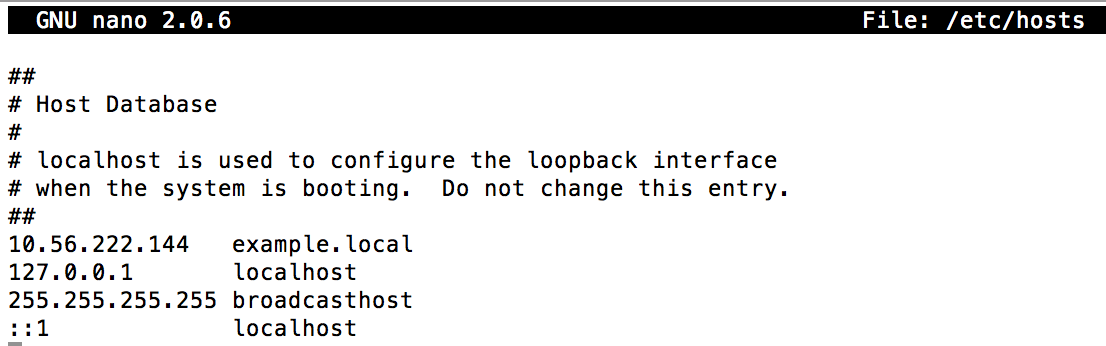

Before I go through the trouble of setting up my real domain with the dynamic DNS service, I can test the concept by editing my hosts file. If you are unfamiliar with what a hosts file is or does, you may want to research it before proceeding further, as changes to this file can adversely affect your connection to the Internet. On my Mac, the hosts file is located at /etc/hosts and can only be edited by a user with admin rights. This is where knowledge of the command line and the Nano editor comes in handy. In terminal, edit the file by typing sudo nano /etc/hosts. When you are done with your changes, press CTRL+O to save and CTRL+X to exit. Look at the existing entries to see how you should enter the data. There is usually a tab character between the IP address and the domain name. I ran ifconfig in terminal to get my local IP address; there are many other ways to find this information. For an example of how to test this process from outside your network, see the section near the end of this post titled “Configure test settings to mimic traffic to your modem”. For now, we will test with an internal IP address. I added my IP address and the test domain example.local to the file and saved it. See image.
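If you would rather see the entry format before touching the real file, here is a minimal sketch that practices on a scratch file instead of /etc/hosts. The address 192.168.1.50 is a placeholder I made up for illustration (use whatever ifconfig reports on your machine), and the helper names hosts_entry and has_host are hypothetical, not part of any tool.

```shell
# Build one hosts-file line: IP address, a tab character, then the domain name.
hosts_entry() {
  printf '%s\t%s\n' "$1" "$2"
}

# Succeed if the hosts-format file ($1) already maps the domain name ($2).
# Comment lines are skipped; the name must match a whole field after the IP.
has_host() {
  awk -v host="$2" '
    !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == host) found = 1 }
    END { exit !found }
  ' "$1"
}

# Practice on a scratch file rather than the real /etc/hosts.
scratch="$(mktemp)"
hosts_entry 192.168.1.50 example.local >> "$scratch"   # placeholder address
has_host "$scratch" example.local && echo "example.local is mapped"
```

Once the line looks right, the same text can be pasted into /etc/hosts with sudo nano as described above.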

Setup Docker network

In order for the proxy server container and the web server containers to see each other in Docker, they MUST all be on the same network. When you use Docker Compose to set up containers, any services in the same file are on the same network by default. However, I am running a separate compose file for each of my sites due to other workflow considerations, and compose creates a different network for each file. (I use separate containers and compose files for backup testing of various websites, and it is easier to share an individual zip file of each website with co-workers.) So to get all the containers on the same network, it was easier to create a network outside of the Docker Compose files. From a terminal window, type docker network create my-network to create a new network called my-network. We will use this network in the compose files.
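Running docker network create a second time reports an error because the name is already taken. If you expect to repeat these steps, a small wrapper can check first. This is only a sketch: ensure_network is a hypothetical helper name of my own, and it relies on docker network inspect returning a non-zero exit status when the network does not exist.

```shell
# Create the shared network only if Docker does not already know about it.
ensure_network() {
  if docker network inspect "$1" >/dev/null 2>&1; then
    echo "network $1 already exists"
  elif docker network create "$1" >/dev/null; then
    echo "network $1 created"
  else
    echo "could not create network $1" >&2
    return 1
  fi
}

# Usage: ensure_network my-network
```

Calling ensure_network my-network is then safe to repeat before starting any of the compose files.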

Setup the Proxy container

There is a container by JWilder called nginx-proxy that is very simple to set up and use. You can run the container directly, but I prefer to set up a Docker Compose file so that all my settings are in one easy-to-edit file. It is also easier to teach co-workers how to start up and run the various containers without needing any real knowledge of how Docker works. I created a separate directory in my working directory called nginx-proxy and saved the following in that directory as a file called docker-compose.yml

    version: '3.3'

    services:
      nginx-proxy:
        image: jwilder/nginx-proxy
        ports:
          - "80:80"
        volumes:
          - /var/run/docker.sock:/tmp/docker.sock:ro

    networks:
      default:
        external:
          name: my-network

To start the proxy server, open terminal and enter into the directory the file is located and type docker-compose up -d. The -d is so that you can return to a command line and the docker container will run in the background.

Setup one web server container

Next we need to set up a web server container on the same network as the proxy server. Create a new folder in your working directory called example-local and create a new file in it called docker-compose.yml with the following text

    version: '3.3'

    services:
      db:
        image: mysql:5.7
        restart: always
        environment:
          MYSQL_ROOT_PASSWORD: somewordpress
          MYSQL_DATABASE: wordpress
          MYSQL_USER: wordpress
          MYSQL_PASSWORD: wordpress

      wordpress:
        depends_on:
          - db
        image: wordpress:latest
        expose:
          - "80"
        restart: always
        environment:
          WORDPRESS_DB_HOST: db:3306
          WORDPRESS_DB_USER: wordpress
          WORDPRESS_DB_PASSWORD: wordpress
          VIRTUAL_HOST: example.local

    networks:
      default:
        external:
          name: my-network

Notice that the last grouping, networks, is the same in this file as in the proxy container’s file. It is also very important to notice the VIRTUAL_HOST entry under environment. The proxy container watches for any started container that has this variable set and configures itself to route requests for that domain to the container. Also note that port 80 is exposed so that the proxy server can talk to the web container.

Open terminal, change into the folder where this file is located, and type docker-compose up -d just as you did for the proxy container.

Test the services

Hopefully, after allowing about a minute for the web container to start, you can open a web browser and visit the fake domain name you created. Type http://example.local into your browser. The very first time I did this, it took about a minute before the web page loaded, but subsequent page loads were very quick. I am not sure if it was something with my computer, my wireless router, or something the proxy server had to do on its end to get the process going. However, when I tested everything again from the beginning, the process went very smoothly and the site responded quickly. I did use Firefox that time, so maybe it was Chrome slowing down the first load and nothing to do with Docker or the containers.

Note that when you first go to the web address you will see the “5 Minute Install” for WordPress (which really takes less than a minute). For testing purposes, you can use a simple username and password and a fake email address.

Shutting down the containers

When you are done, you can shut down the containers in a couple of ways. If you use the temporary shutdown process, you can restart the containers and all your website information will still be available. If you do the full shutdown, the containers will be cleared out and you will have to create a whole new website. There is a simple way to keep the data for the website, but it is outside the scope of this post. To learn more, look up using volumes in a Docker Compose file.

To do a temporary shutdown and keep your data, open terminal in the folder where you started the container (for example, the example-local folder) and issue the command docker-compose stop. This will stop the container. To restart the container, use the command docker-compose start.

To do a full shutdown and clean up the containers, go to each folder where you issued the command to start a container (for example, the nginx-proxy folder) and issue the command docker-compose down. This will clean up any network settings and container settings and free up some memory. Just know that without volumes you will lose any work you did in the running container.

A Very Important Note

There is an order to starting the containers. You must start the nginx-proxy server first. This container is the one that watches for new containers and sets up the configuration that lets domain names point to the various web server containers you start.
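To avoid starting things out of order by hand, the sequence described above can be wrapped in a short script. This is a rough sketch, assuming the nginx-proxy and example-local folders from this post sit side by side in the current directory; start_stack is a hypothetical helper name of my own.

```shell
# Start each compose project in the order given; list the proxy folder first.
start_stack() {
  for dir in "$@"; do
    echo "starting $dir"
    # Run in a subshell so the caller's working directory is left unchanged.
    (cd "$dir" && docker-compose up -d) || return 1
  done
}

# Usage, from the directory that contains both folders:
#   start_stack nginx-proxy example-local
```

The function stops at the first folder that fails to start, so a web container is never brought up when the proxy did not start.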

Configure test settings to mimic traffic to your modem

Now that the settings for testing on the internal network are functional, I want to mimic the process of using the dynamic DNS service to access my web services from outside my network.

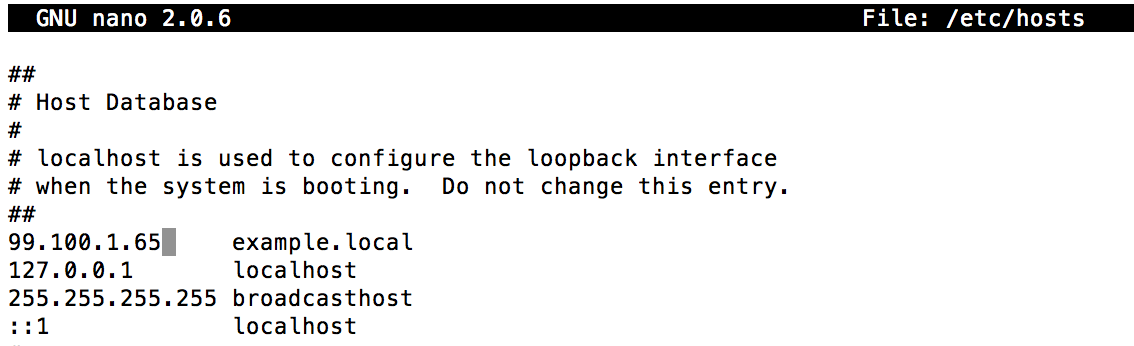

First, we need to find out the external IP address of your modem. I like to use the What Is My IP website and look for the second line, which gives you the IPv4 address. We will add that address to our /etc/hosts file in place of the internal network address. See the image, and note that I did not use my real external IP address in the image, although in my hosts file I did. I did not think it was a good idea to share my real IP address with you in this blog for security reasons.

Next, you need to configure your modem to forward port 80 to the server that is running the Docker containers. You should already have your internal IP address if you followed along above. Since there are numerous different modems out there and each has its own setup, I will not go into how to set up your modem to allow port forwarding. If you are unsure of this process, please study up on how to change your settings and on any security implications of opening ports to your internal network. In many cases, port 80 is fairly safe as long as you keep your web containers updated and the websites follow all security precautions. I am not a security expert, but it would seem to me that if your port 80 is hacked, the attacker is limited to the Docker container itself. However, the attacker could change your internal website to be used for other nefarious purposes. Be safe and do your research.

Next, start up the nginx-proxy server as described earlier in this article. At this stage of the test, if you go to your browser and type http://example.local, you should get a 503 Error Service not available message. If not, check that your server allows port 80 traffic. For example, by default my Mac has port 80 blocked by the Mac’s firewall. I had to disable the firewall for this test. I am sure there is a better way to adjust my Mac’s firewall to allow only port 80 and still keep the firewall on, but for testing purposes I did not mind temporarily having the firewall off. As I have said before, be sure you understand ALL security implications of these actions.

Next, start up the example.local web service container. After about 30 seconds to a minute, go ahead and try the http://example.local website. If you get a 502 Bad Gateway, then there is an issue with your nginx-proxy server. In my case, it turned out that I had accidentally started a different copy of my nginx-proxy server from earlier testing that was on a different Docker network. Once I shut down both services and restarted the correct proxy server, everything worked.
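The status codes above can also be checked from the command line, which takes the browser out of the picture. The sketch below sends the domain as a Host header with curl, so it works even before the hosts file is edited. The helper names site_status and explain_status are hypothetical, the default address 127.0.0.1 assumes you are running it on the Docker host itself, and the explanations are my reading of what I saw in this walkthrough rather than official nginx-proxy documentation.

```shell
# Ask the proxy for a site by name and print only the HTTP status code.
# $1 = domain (sent as the Host header), $2 = address of the Docker host.
site_status() {
  curl -s -o /dev/null -w '%{http_code}' -H "Host: $1" "http://${2:-127.0.0.1}/"
}

# Map the status codes seen in this walkthrough to a likely cause.
explain_status() {
  case "$1" in
    2??|3??) echo "site is answering through the proxy" ;;
    502) echo "proxy knows the domain but cannot reach its container; check that both are on the same Docker network" ;;
    503) echo "proxy is up but has no container registered for this domain yet" ;;
    000) echo "nothing answered on port 80; check the proxy container and the firewall" ;;
    *) echo "unexpected status $1" ;;
  esac
}

# Usage: explain_status "$(site_status example.local)"
```

A redirect (3xx) counts as success here because a site may answer its first request by redirecting to a setup page.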

Troubleshooting

I tested the above on my machine, and all seems to be working fine. I will try a few more changes and see if I can add some troubleshooting tips later.

I did find that if you enter docker stats at the command prompt in a terminal window, a running status report is shown for all running containers. If you keep a separate window open with the stats running as you start up your containers, you can see the CPU and memory usage as they start and run. What was helpful for me was to watch the MySQL container as the web service was starting up. As the container starts there is a jump in CPU usage, and when the setup process has completed the CPU usage should drop significantly. Once that happened, I knew I could visit my webpage because the container should be ready to receive requests.
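The same idea can be scripted: take one-off readings with docker stats --no-stream and wait until the CPU figure settles. This is a sketch under my own assumptions, not a tested recipe: the helper names are hypothetical, the 5 percent threshold and 2 second pause are arbitrary, and you need to pass your own container name as shown by docker ps.

```shell
# Print the current CPU percentage of one container as a bare number.
container_cpu() {
  docker stats --no-stream --format '{{.CPUPerc}}' "$1" | tr -d '%'
}

# Succeed when a CPU reading ($1) is below a threshold ($2), both in percent.
is_below() {
  awk -v cpu="$1" -v max="$2" 'BEGIN { exit !(cpu + 0 < max + 0) }'
}

# Poll until the container's CPU usage drops below the threshold.
# $1 = container name, $2 = threshold (default 5), $3 = attempts (default 30).
wait_until_quiet() {
  name="$1"; max="${2:-5}"; tries="${3:-30}"
  while [ "$tries" -gt 0 ]; do
    cpu="$(container_cpu "$name")"
    if [ -n "$cpu" ] && is_below "$cpu" "$max"; then
      echo "$name is quiet at ${cpu}% CPU"
      return 0
    fi
    tries=$((tries - 1))
    sleep 2
  done
  echo "$name did not settle" >&2
  return 1
}

# Usage: wait_until_quiet <name of your MySQL container>
```

A low CPU reading is only a hint that startup has finished, so it is still worth loading the page to confirm.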

Resources

When I originally set up this project, I kept getting an Error 503 message when visiting the proxied websites. I kept researching various posts, and this one gave me some ideas (or at least I thought it did). I don’t think any of the working code above came from this resource, but I am adding it in case it helps you out. See this issue from the nginx-proxy GitHub about a proxied web container started with docker-compose.

What did help me out was looking at the Docker Networking section that pertains to creating “special networks”. It gave me the idea, and the hope, to keep trudging forward in trying to understand why I could not get the proxy server to see the other containers. The answer was to create a network in Docker and then connect each container to that network.

There is a Google Forum used by the developer of the nginx-proxy container that is very helpful. In order to post questions, you will need to have a Google ID and join the group. I did not join the group, but I found some of the questions and answers posted there to be enlightening.

Of course, it should go without saying that for any container you want direct information about, you should visit its Docker Store page; for this project, that is the page for jwilder nginx-proxy.

The blog that really got me thinking that I could do this project, and that someone else had success doing it, was a blog by Florian Lopes about hosting multiple subdomains on a single Docker host. The best answers you will get come from reading through all of the comments on the page!