How to use Python to quickly find the most common words on a webpage

Today’s quick Python script finds the most frequently repeated words on a webpage. The idea came to me while I was thinking about processing sites I found through a Google search and building a database of common words for each site, to help me find useful documents related to one topic. I would search for sites on one theme, and within that theme sites tend to cover a diverse set of topics. I could then keep my own list of sites as a quick research tool whenever I needed to look up resources on my educational topics for an upcoming meeting. To get a better idea of what a page on a website is about, I figured a list of its most common words might be helpful. So here we go.

Preparation

This is a Python script, so I will assume you know how to install Python and create simple scripts. This is not a tutorial on creating scripts, as there are plenty of resources out there to get you started. The following script was written and tested on a Mac with Python 3.8.1.

I start by creating a virtual environment for my scripts so I don’t download packages that may be incompatible with other scripts I write. If you are unfamiliar with virtual environments, check out this website.

Install the following packages with pip: requests and BeautifulSoup

pip install requests

pip install beautifulsoup4

The requests library simplifies our calls to get information from a website, and the BeautifulSoup library helps to process a web page into an easy to search DOM tree.

I also installed the “lxml” and “html5lib” libraries to work with BeautifulSoup; however, they are not required for this script to work. If you want to use the same libraries I used, you can save the following as “requirements.txt” and install them with pip install -r requirements.txt instead.

requirements.txt

beautifulsoup4==4.10.0
certifi==2021.10.8
charset-normalizer==2.0.10
html5lib==1.1
idna==3.3
lxml==4.7.1
requests==2.27.1
six==1.16.0
soupsieve==2.3.1
urllib3==1.26.8
webencodings==0.5.1

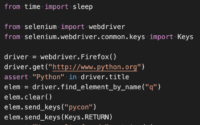

Let’s talk about the imports for this project

I recently learned of the “Requests” project and am very excited to explore it further. Requests really does simplify the process of making calls to get data from web servers. I know I will be using this package for my day job, where it will decrease the time it takes us to prototype ideas. The package is very easy to use and the documentation is VERY helpful.

I have used “BeautifulSoup” for a number of years for my PHP development needs, as it made scraping data from the local public police reports website easy. This package has greatly improved over the years and is my go-to for traversing HTML and XML documents. The documentation is VERY helpful and easy to follow. Just reading the docs alone started ideas for future projects in my head.

Finally, I never realized that “Counter” was available by default in the Python version I am currently using (Python 3.8.1); it is a class in the standard library’s collections module. For years I had used my own dictionaries to maintain a listing of words and their counts. I even needed code to check whether a word already existed in the dictionary before incrementing its counter. Not anymore! Counter greatly simplifies the use of a counting dictionary and automatically handles the check for an existing value before incrementing. Now I only need one line of code similar to the following: counter[word] += 1
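As a rough illustration of the difference described above, the sketch below counts the same words twice: once with a plain dictionary and an explicit existence check, and once with Counter. The sample word list and the variable names are made up for the example.

```python
from collections import Counter

# Made-up sample data, for illustration only
words = ["python", "soup", "python", "requests", "soup", "python"]

# The manual approach: check whether the key exists before incrementing
manual_count = {}
for word in words:
    if word in manual_count:
        manual_count[word] += 1
    else:
        manual_count[word] = 1

# The Counter approach: missing keys are treated as zero automatically
counter = Counter()
for word in words:
    counter[word] += 1

print(manual_count)
print(dict(counter))
```

Both approaches end up with the same counts; Counter simply removes the need for the if/else.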

Add the imports

from collections import Counter
import requests
from bs4 import BeautifulSoup

Stop Words

Stop Words is a term we used when I worked in the Conflicts Dept. for a law firm researching client information to make sure we could take on a new case for a potential client. We needed to search multiple databases of information that included tons of words that are irrelevant to the search. For example, we did not need the following words to show up in our results as they had no relevant meaning to the task: and, that, which, find, done, bank, land, wire, transfer, school, board, etc.
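To make the idea concrete, here is a small sketch of stop-word filtering. The sample sentence, the stop words, and the function name remove_stop_words are all invented for the example; a set is used here only because membership checks on a set stay fast as the list grows.

```python
def remove_stop_words(words, stop_words):
    """Return the words that are not in stop_words, keeping their order."""
    return [word for word in words if word not in stop_words]


# Hypothetical stop words and sample text, for illustration only
stop_words = {"and", "that", "which", "the"}
sample = "the client and the firm that merged".split()

kept = remove_stop_words(sample, stop_words)
print(kept)  # ['client', 'firm', 'merged']
```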

You can decide which words are not relevant to your own search needs and leave them out of the results. I came up with a very short list just for this example, which I used in testing against this webpage so that my results would be more or less useful to me. Adjust according to your needs. Add the following to your script:

# list of stop words (words not to include)
stop_list = ["about", "blog", "contact", "find", "full", "have", "list", "need", "news", "their", "with", "your"]

The word counter

This is an easy one: we need to instantiate our Counter and assign it to a variable. Add this to your script next.

# prepare a word counter
word_count = Counter()

Time to request the web page

The following could be written on one line, but I find it helpful to pull the URL out into an easy-to-see variable. If I placed the URL directly in the get() call, my eyes would easily pass over it when reviewing the code. This is just a personal preference. In larger scripts I would put all my constants near the top of the file for easy access, but to keep the walkthrough simple we add it here.

The base_url is simple in this case, as I was running a local copy of a WordPress site on my machine. You can use whatever URL you wish to review. On the next line we make a GET request to the URL and store the response in a variable (in this case, simply ‘r’). Add the following code to your script with any personal adjustments you desire.

# let's get our web page (adjust to the url you want to review)
base_url = 'http://localtest.com:8880/'
r = requests.get(base_url)

Soup on a cold day?

Next we will parse the response from the webpage. This is made simple with BeautifulSoup. The requests.get() function returns a Response object, and we want the text portion of the webpage. This is stored in its “text” attribute, so we pass ‘r.text’ to the BeautifulSoup() constructor as the first argument. The second argument is not required if we are using the default parser; however, I like to name the parser explicitly now so I do not forget later if I find I want to use ‘lxml’ or ‘html5lib’ instead. We then save the object BeautifulSoup generates in an easy-to-remember variable, ‘soup’. Add the following to your script:

# parse the webpage into an element hierarchy and store in soup
soup = BeautifulSoup(r.text, 'html.parser')

More than alphabet soup, let’s get some words

Now it is time to let BeautifulSoup shine! The package has a method called get_text() that pulls out only the text between the HTML elements. Just note that the text between <script> tags may be included too. I found that I needed to pass two arguments to this method to get better results from the remainder of the script. I wanted to generate one long string of words, so the first argument is a space, used as the separator that joins the pieces of text. The second argument, strip=True, strips out the extra whitespace that could be in the raw HTML (think of an unminified webpage with lots of tab and space characters for human readability).

In that same line of code, we force the results of get_text() to lowercase with the lower() method and then split the string into individual words with the split() method, saving the resulting list in a variable called “all_words”. I wanted a list of words because it is very easy to loop through and process. Note that this list includes multiple copies of the same word, one for each time it appears on the webpage. This is what we expect, so that when we loop through the list we can use our Counter object to count the total occurrences of each word.
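To see what the two get_text() arguments do, here is a small sketch run against a tiny made-up HTML snippet rather than a real page. The snippet and the variable names glued and words are assumptions for the example only.

```python
from bs4 import BeautifulSoup

# A tiny made-up page, used only for this illustration
html = "<h1>Top Words</h1><p>  Python   counts words  </p>"
soup = BeautifulSoup(html, "html.parser")

# Without a separator, text from neighbouring elements runs together
glued = soup.get_text(strip=True)

# With a space separator and strip=True, each piece of text is trimmed
# and the pieces are joined with a single space before splitting
words = soup.get_text(" ", strip=True).lower().split()

print(repr(glued))
print(words)  # ['top', 'words', 'python', 'counts', 'words']
```

Notice how, without the separator, the end of the heading and the start of the paragraph fuse into one "word", which would throw off the counts.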

Add the following to your script:

# Get only the main text of the page as list of words
all_words = soup.get_text(" ", strip=True).lower().split()

Loop through all the words and count the ones we care about

This is the meat of the counting portion of the code. I know this is a big chunk to discuss, but I’ll do the best I can. First we set up our for loop. We want to look at each ‘word’ in the ‘all_words’ list. Note: words will be repeated in the list, which is what we want at this point.

The first thing I do with each word is strip off any punctuation, like the period, comma and question mark. When I split the soup text above, any punctuation stayed attached to the word next to it.
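One thing worth knowing is that strip() only removes characters from the two ends of a string, and only the characters you list. The sketch below compares the three-character version with a broader variant that uses string.punctuation from the standard library; the sample tokens are made up, and the broader variant is a suggestion rather than what the script in this post uses.

```python
import string

# Made-up tokens, as they might look after splitting page text on whitespace
tokens = ["hello,", "world!", "(python)", "don't", "end."]

# Only periods, commas and question marks are removed, and only at the ends
basic = [token.strip(".,?") for token in tokens]

# string.punctuation covers all ASCII punctuation characters
thorough = [token.strip(string.punctuation) for token in tokens]

print(basic)     # ['hello', 'world!', '(python)', "don't", 'end']
print(thorough)  # ['hello', 'world', 'python', "don't", 'end']
```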

Next we check the length of the word with an ‘if’ statement. My choice was to only look at words longer than three characters. You may wish to increase the limit by one to avoid counting four-letter words.

If the word is four characters or more we proceed to check if the word is in our Stop List, and if so we use the ‘continue’ command to skip to the next word. We don’t want to count any of the words that were in the soup if they are in our Stop List.

Thus, if the word is at least 4 characters and not in our Stop List, then we want to add that word to our count. The variable ‘word_count’ is a Counter object, so we can use our current word as a key and tell the counter to add one to the current count. Counter will check whether our key is in its listing and add one to the current value; if the key is not already there, it adds the key and starts the count at one for us. Note: you could manually use your own dictionary to track counts, which adds a couple of lines of code. Using Counter keeps our code cleaner.
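The steps just described can also be sketched as a small reusable function, which makes them easy to try on a short list before pointing the script at a real page. The function name count_words, the min_length parameter, and the sample input are assumptions made for this example.

```python
from collections import Counter


def count_words(words, stop_words, min_length=4):
    """Count words that are at least min_length long and not stop words."""
    counts = Counter()
    for word in words:
        cleaned = word.strip(".,?")
        if len(cleaned) < min_length:
            continue  # too short to be interesting
        if cleaned in stop_words:
            continue  # explicitly excluded
        counts[cleaned] += 1
    return counts


# Made-up input, for illustration only
sample = ["python", "is", "fun.", "python", "with", "soup,", "soup?"]
result = count_words(sample, stop_words={"with"})
print(result)
```

Here "fun." is dropped because it is only three characters once the period is stripped, "with" is dropped as a stop word, and "soup," and "soup?" are counted together as "soup".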

Add the following to our script:

# count words
for word in all_words:
    cln_word = word.strip('.,?')
    # ignore words fewer than 4 characters long
    if len(cln_word) > 3:
        # ignore words in our custom stop list
        if cln_word in stop_list:
            continue
        word_count[cln_word] += 1

Print the most common words

This is the final step! We use a method of our Counter object, most_common(), that returns the words sorted from most to least common. We pass this method an integer for how many words to return. I think 50 works best for me.
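As a quick illustration of what most_common() returns, here is a sketch using a handful of made-up counts. The result is a list of (word, count) tuples, highest count first.

```python
from collections import Counter

# Made-up counts, for illustration only
word_count = Counter({"python": 5, "soup": 3, "word": 2, "page": 1})

# Ask for the two most common entries
top_two = word_count.most_common(2)
print(top_two)  # [('python', 5), ('soup', 3)]

# With no argument, most_common() returns every entry, highest count first
everything = word_count.most_common()
print(len(everything))  # 4
```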

Add the following to our script:

# print 50 most common words
print(word_count.most_common(50))

Sample set of results

The following is a portion of the output when I run the code against this page (using a slightly modified Stop Word list):

[('python', 14), ('theme', 9), ('requests', 8), ('common', 7), ('install', 7), ('beautifulsoup', 7), ('webpage', 6), ('resources', 6), ('import', 6), ('script', 5), ('website', 5), ('following', 5), ('package', 5), ('counter', 5), ('quickly', 4)]

As you can see, you can get a general idea of the topic of this page. It looks like we are talking about a Python script; I see that BeautifulSoup is mentioned, along with the words website and webpage, and the word counter as well. From this I might surmise that this site is counting something common, using Python and the BeautifulSoup library against a webpage or theme.

Full Source Code

from collections import Counter
import requests
from bs4 import BeautifulSoup

# list of stop words (words not to include)
stop_list = ["about", "blog", "contact", "find", "full", "have", "list", "need", "news", "their", "with", "your"]

# prepare a word counter
word_count = Counter()

# let's get our web page (adjust to the url you want to review)
base_url = 'http://localtest.com:8880/'
r = requests.get(base_url)

# parse the webpage into an element hierarchy and store in soup
soup = BeautifulSoup(r.text, 'html.parser')

# Get only the main text of the page as list of words
all_words = soup.get_text(" ", strip=True).lower().split()

# count words
for word in all_words:
    cln_word = word.strip('.,?')
    # ignore words fewer than 4 characters long
    if len(cln_word) > 3:
        # ignore words in our custom stop list
        if cln_word in stop_list:
            continue
        word_count[cln_word] += 1

# print 50 most common words
print(word_count.most_common(50))

Troubleshooting

An appropriate representation not found

If you are getting the following HTML message and a 406 response status:

“An appropriate representation of the requested resource could not be found on this server. This error was generated by Mod_Security.”

and your results look similar to the following:

[('acceptable', 2), ('this', 2), ('appropriate', 1), ('representation', 1), ('requested', 1), ('resource', 1), ('could', 1), ('found', 1), ('server', 1), ('error', 1), ('generated', 1), ('mod_security', 1)]

This is likely because you are contacting a server that requires some header information in the GET request, for example:

'accept':'text/html;',
'accept-encoding':'gzip, deflate, br',
'accept-language':'en-US',
'user-agent':'Mozilla/5.0'

Check out the Custom Headers section of the Requests documentation to learn how to add appropriate headers to your GET requests.
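As a minimal sketch of how such headers might be passed along, the example below wraps the request in a small helper that also stops on error responses, so a 406 page is not counted as if it were the real content. The function name fetch_page and the timeout value are assumptions for this example, and the header values are only a starting point to adjust for the server you are contacting.

```python
import requests

# Header values based on the list above; adjust them for the server you query.
# accept-encoding is left out so that requests can fall back to its own default.
headers = {
    "accept": "text/html",
    "accept-language": "en-US",
    "user-agent": "Mozilla/5.0",
}


def fetch_page(url, headers, timeout=10):
    """Return the page text, raising an error on 4xx/5xx responses."""
    response = requests.get(url, headers=headers, timeout=timeout)
    response.raise_for_status()  # raises requests.HTTPError on a 406, for example
    return response.text


# Example call (needs a reachable server, so it is left commented out):
# html = fetch_page("http://localtest.com:8880/", headers)
```

The returned text can then be handed to BeautifulSoup in place of r.text.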